It seems like with the current progress in ML models, doing OCR should be an easy task. After all, recognizing handwritten numbers was one of the prime benchmarks for image recognition (MNIST was released in 1994).

Yet when I try to OCR any of my handwritten notes, all I ever get is a jumbled mess of nonsense. Am I missing something? Is my handwriting really that atrocious, or is it the models?

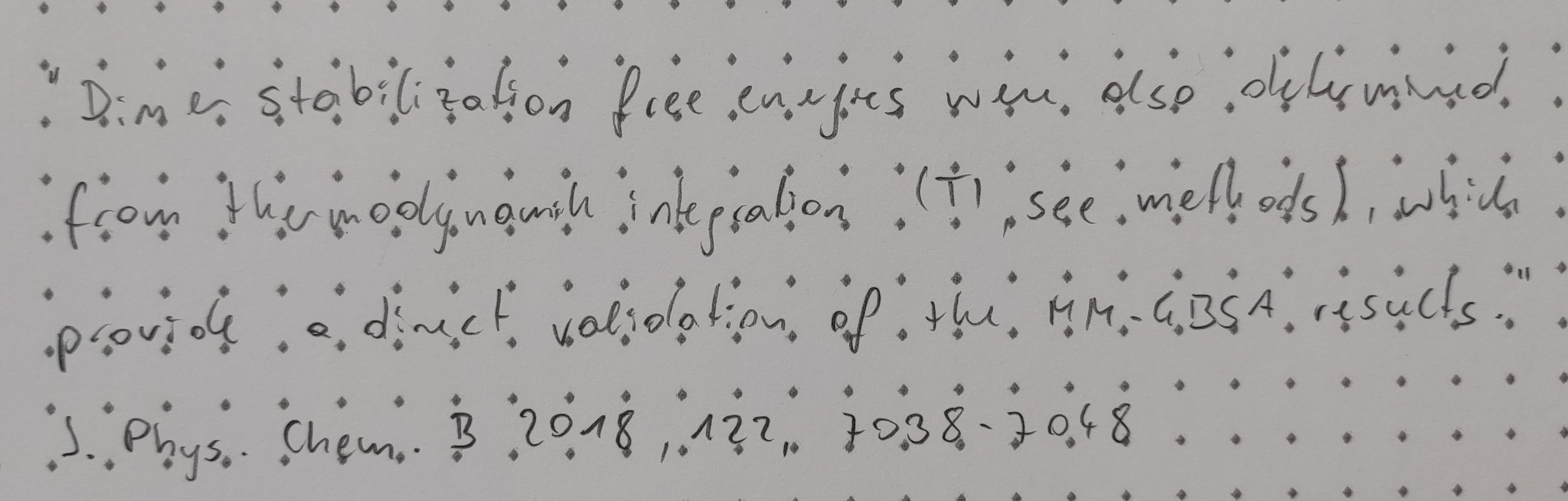

Here’s a quick example, a random passage from a scientific article:

I tried EasyOCR, Tesseract, PPOCR and a few online tools. Only PPOCR correctly identified the numbers and the words “J.” and “Chem.”; the rest was just a random mess of characters.
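If you want to compare tools more objectively than “a random mess”, a common metric is character error rate (CER): the edit distance between the OCR output and a hand-typed reference transcription, divided by the length of the reference. Here’s a minimal stdlib-only Python sketch. The `levenshtein` and `cer` helpers are my own hypothetical names, not part of EasyOCR, Tesseract or PPOCR, and you’d have to paste in each tool’s raw output yourself:

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions and
    substitutions needed to turn `a` into `b` (classic dynamic programming)."""
    prev = list(range(len(b) + 1))  # distances from "" to each prefix of b
    for i, ca in enumerate(a, start=1):
        curr = [i]  # distance from a[:i] to ""
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute, free if chars match
            ))
        prev = curr
    return prev[-1]


def cer(reference: str, hypothesis: str) -> float:
    """Character error rate: edit distance divided by reference length.
    0.0 means a perfect transcription; values can exceed 1.0 if the OCR
    output contains lots of extra junk characters."""
    if not reference:
        raise ValueError("reference must be non-empty")
    return levenshtein(reference, hypothesis) / len(reference)


if __name__ == "__main__":
    # Placeholder strings: replace with your own hand-typed transcription
    # and each tool's raw output. These are NOT real results.
    reference = "J. Chem. 12, 345"
    outputs = {
        "tool_a": "J. Chem. 12, 345",
        "tool_b": "J. Ckem. 1Z, 34S",
    }
    for name, text in outputs.items():
        print(f"{name}: CER = {cer(reference, text):.2f}")
```

For example, `cer("abcd", "abed")` is 0.25 (one substitution over four characters). The sketch does no case or whitespace normalisation, so depending on what you care about you may want to lowercase or strip both strings first.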

Edit: thank you all for shitting on my handwriting. That was not asked for, and also not helpful. That sample was intentionally “not nice”, but it is how I would write a note for myself. (You should see what my notes look like when I don’t need to read them again, lol.)

ChatGPT can transcribe it perfectly, and it also works on a slightly larger sample. DeepSeek works ok-ish but made some mistakes, and Gemini is apparently not available in my country atm. I guess context awareness is what makes those models better at transcription, and it’s also why I can read my notes back without problems.
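As a toy illustration of why prior knowledge about language helps (this is not how ChatGPT works internally, just the crudest version of the idea): snap each garbled token to the closest word in a known vocabulary. The vocabulary and the example string below are made up, and `correct_tokens` is a hypothetical helper built on Python’s standard `difflib.get_close_matches`:

```python
from difflib import get_close_matches

# Hypothetical tiny vocabulary standing in for "knowing which words are likely"
VOCAB = ["chemistry", "journal", "molecule", "reaction", "solvent"]


def correct_tokens(raw: str, vocab: list[str], cutoff: float = 0.6) -> str:
    """Replace each whitespace-separated token with its most similar
    vocabulary word if difflib's similarity ratio is at least `cutoff`;
    tokens with no close enough match are kept unchanged."""
    corrected = []
    for token in raw.split():
        matches = get_close_matches(token.lower(), vocab, n=1, cutoff=cutoff)
        corrected.append(matches[0] if matches else token)
    return " ".join(corrected)


if __name__ == "__main__":
    # Made-up character-level OCR errors (e/c and i/l confusions)
    print(correct_tokens("moleculc reactlon in solvcnt", VOCAB))
```

With this vocabulary, the garbled input comes back as “molecule reaction in solvent”, while “in” is left alone because nothing in the list is similar enough. The `cutoff` is the trade-off: set it too low and correctly read words that just aren’t in the vocabulary get “fixed” into wrong ones. A large model goes much further by using the whole surrounding sentence rather than single words.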

Well, I haven’t had any issues with my handwriting in exams. But if I write something fast and just for myself, it’ll look somewhat like this. If I took my time it would be better, but that’s not the point.

And that’s totally fine. I didn’t say you’re not good. Perfect writing isn’t necessary; I’m just giving my opinion, since you did ask in the post whether your handwriting was bad.

At the end of the day, many OCR models were trained mostly on typeset text, so it makes sense that a general-purpose model wouldn’t be very good at recognizing handwriting that looks non-standard, so to speak.